Checking out Sentinel Data Lake

Ever since Sentinel Data Lake was announced, I've wanted to try it!

Introduction

Data Lake sounds like it's going to revolutionize the usage of Sentinel (I really dislike using big words, but I really think so). Data Lake costs about 1% of what Analytics Logs cost, which would at last make it possible to simply ingest all security logs into Sentinel and then pick out what's needed for Analytics. I'm a big fan of consolidating security logs in a single source: it's a big time-saver for incident response and forensics.

Below are my thoughts and experiences trying Data Lake.

Setup

The setup is fairly simple and I won't go into detail right now. Just follow Microsoft's documentation.

I only have two remarks to add to the official documentation:

Regions

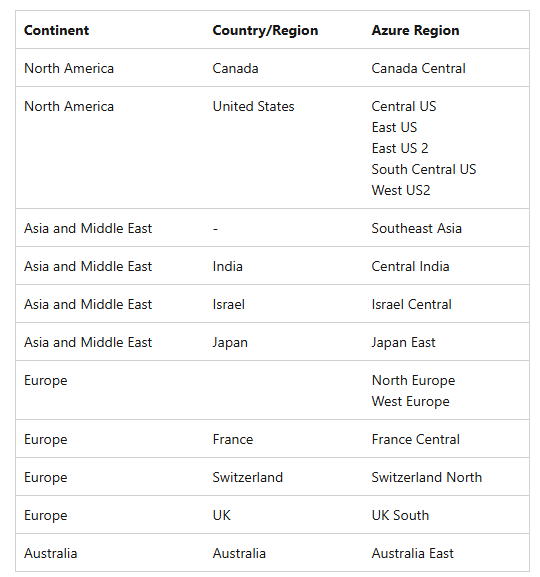

According to Microsoft, only some regions are supported:

Keep that in mind, because you simply can't deploy Data Lake if your Sentinel instance is in another region. And as you might know, Sentinel is one of the Azure resources that can't be moved natively. Moving it manually is possible but a major PITA.

Permissions

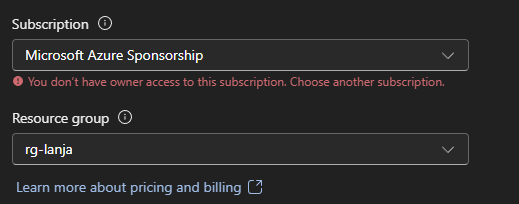

The docs simply state the following regarding Azure permissions:

You must be the subscription owner.

This is only half true, though: for whatever reason, you will get a permission error if your Owner permissions are inherited.

As soon as you assign the Owner permission directly to the user, onboarding works.

Warning

Don't use inherited permissions for Data Lake onboarding

Success

Use directly assigned Owner permissions

Though do consider removing them after onboarding is done if you don't need them.

Usage

Data Lake Explorer

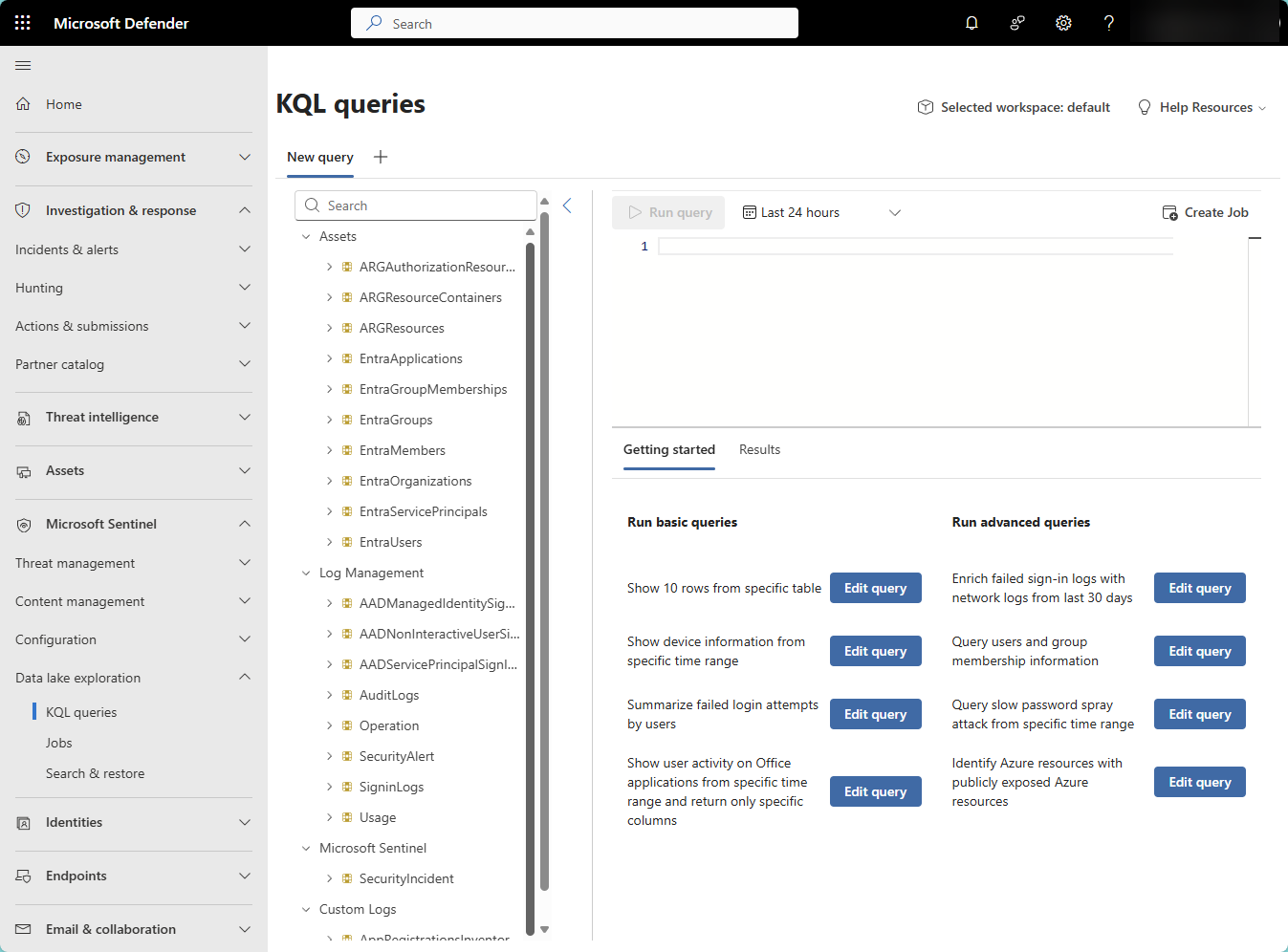

The explorer is located in the sidebar under Microsoft Sentinel --> Data lake exploration --> KQL queries. This is where you run queries against your Data Lake logs.

Duplication

All data ingested into Analytics Logs is automatically duplicated into Data Lake at no additional cost, so you can theoretically query it here as well. Do keep in mind, though, that querying Data Lake is a billable action, whereas querying Analytics Logs is always free!

Inventory Logs

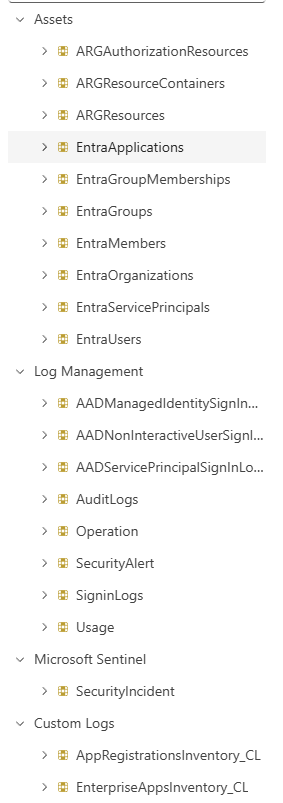

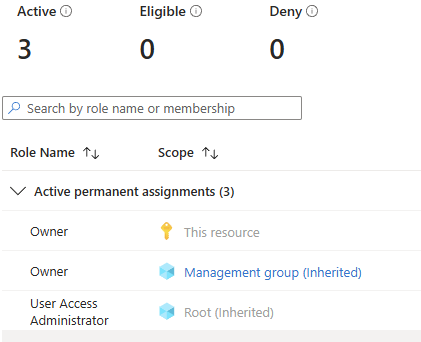

In addition to the transferred Analytics Logs, Microsoft (at last) provides some inventory information from Entra ID. Until now this was always missing, especially for correlations. In the screenshot below you can see all the native tables, as well as two custom log tables at the bottom (which are Analytics Logs).

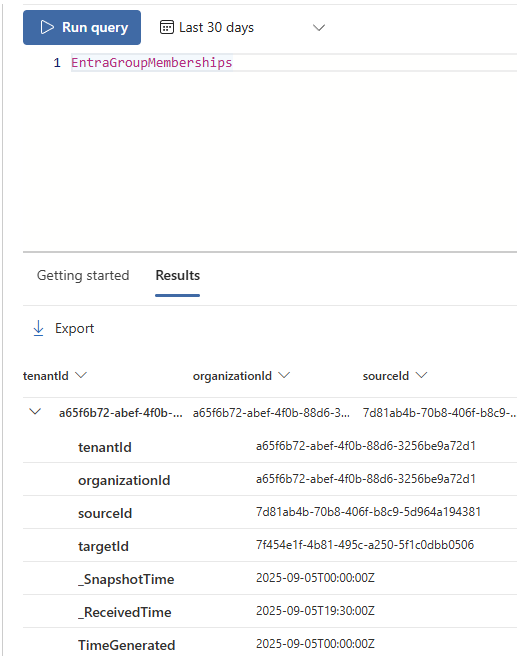

Right now, though, some of the data is not really usable, e.g. the EntraGroupMemberships table doesn't really contain any relevant information.

Jobs

It's a funny name, just Jobs 😁

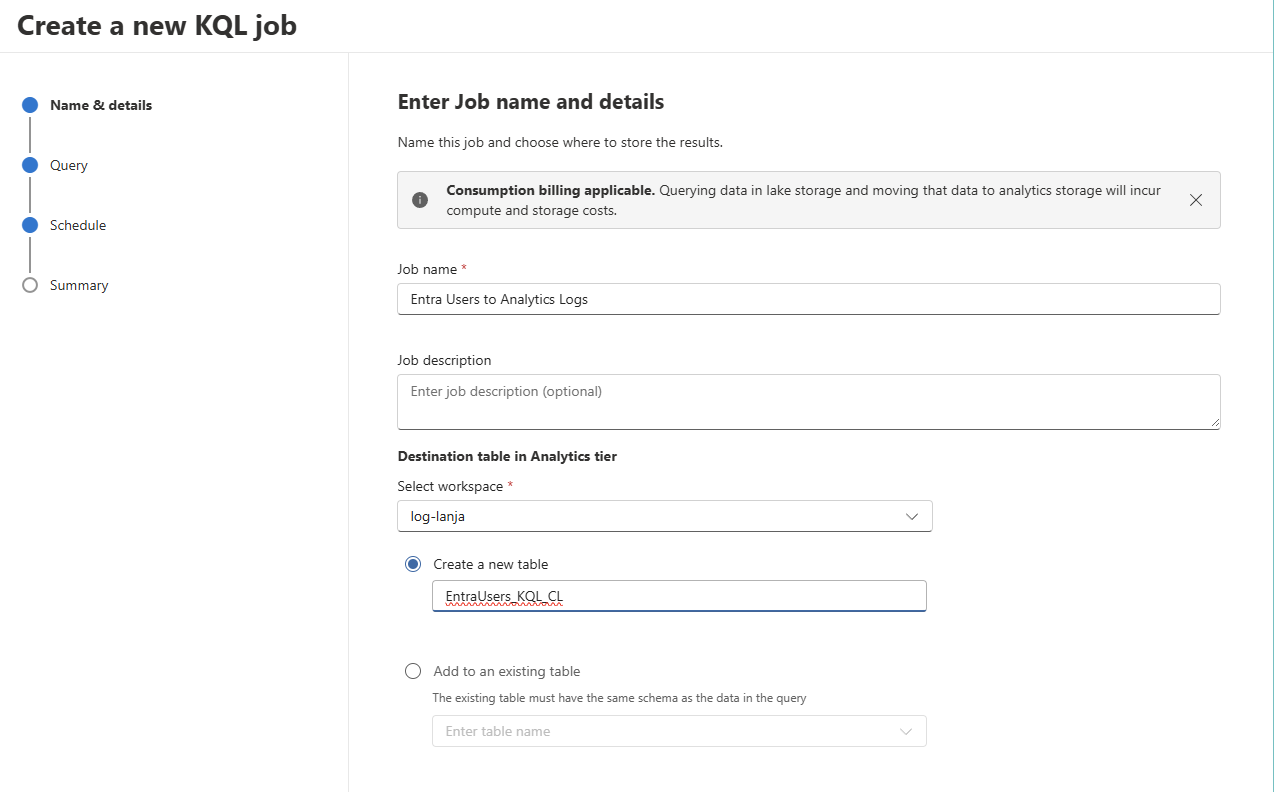

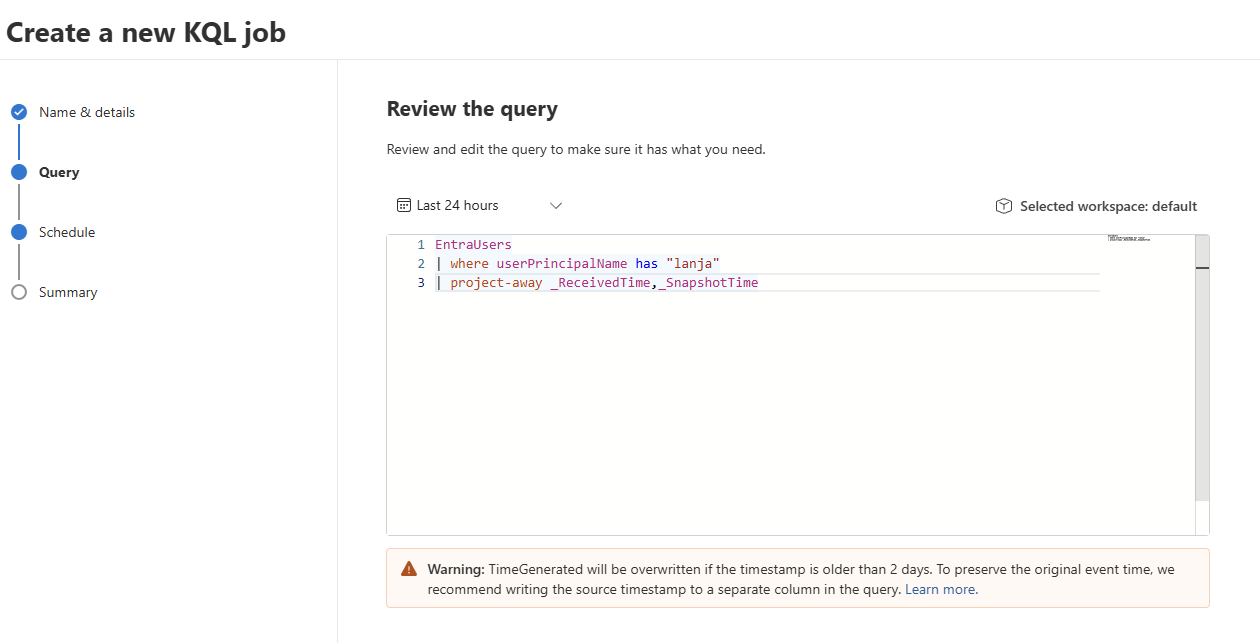

Anyway, it's essentially what Summary Rules used to be: You create a KQL query and all matching results are copied to a selected target table. This table can be in the Analytics Logs tier, so you effectively "promote" your logs.
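To make that a bit more concrete, here is a rough sketch of what such a job query could look like. It is purely illustrative and not the query from the screenshots: the choice of `SigninLogs` and the filter on failed sign-ins are assumptions, and any table you keep in Data Lake would work the same way.

```kql
// Illustrative job query (assumption: SigninLogs is available in your Data Lake).
// Only failed sign-ins are selected, so only those rows are copied
// to the target table chosen in the job configuration.
SigninLogs
| where ResultType != "0"   // "0" means the sign-in succeeded
| project TimeGenerated, UserPrincipalName, IPAddress, ResultType, ResultDescription
```

The narrower the query, the less data ends up in the more expensive Analytics Logs tier, which is the whole point of promoting selectively.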

Considerations

Custom Table name

As you can see in the screenshot above, the newly created custom table gets the suffix _KQL_CL, which goes beyond the general requirement that custom table names have to end in _CL. I'm not sure why it is done that way, but since creating a custom table is quite the hassle in my opinion, I'll just take it.

Column names and special characters

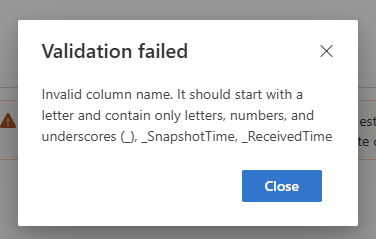

Funnily enough, the built-in tables I mentioned in Inventory Logs have columns whose names start with an underscore (_), and Jobs don't support that.

The error message is pretty cryptic until you find out that _SnapshotTime and _ReceivedTime are the columns that can't be added. So just use project-away or similar to get rid of them.
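A minimal sketch of that workaround, assuming the job reads from one of the inventory tables (the table name below is only an example) and that the two columns named above are the only ones that need to go:

```kql
// Example only: swap in whichever inventory table you are promoting.
EntraGroupMemberships
// Drop the underscore-prefixed columns that Jobs reject.
| project-away _SnapshotTime, _ReceivedTime
```

`project-away` also accepts wildcard patterns, so `project-away _*` might be a shorter way to drop every column with a leading underscore. Whether Jobs accept that form is untested here.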

Timing

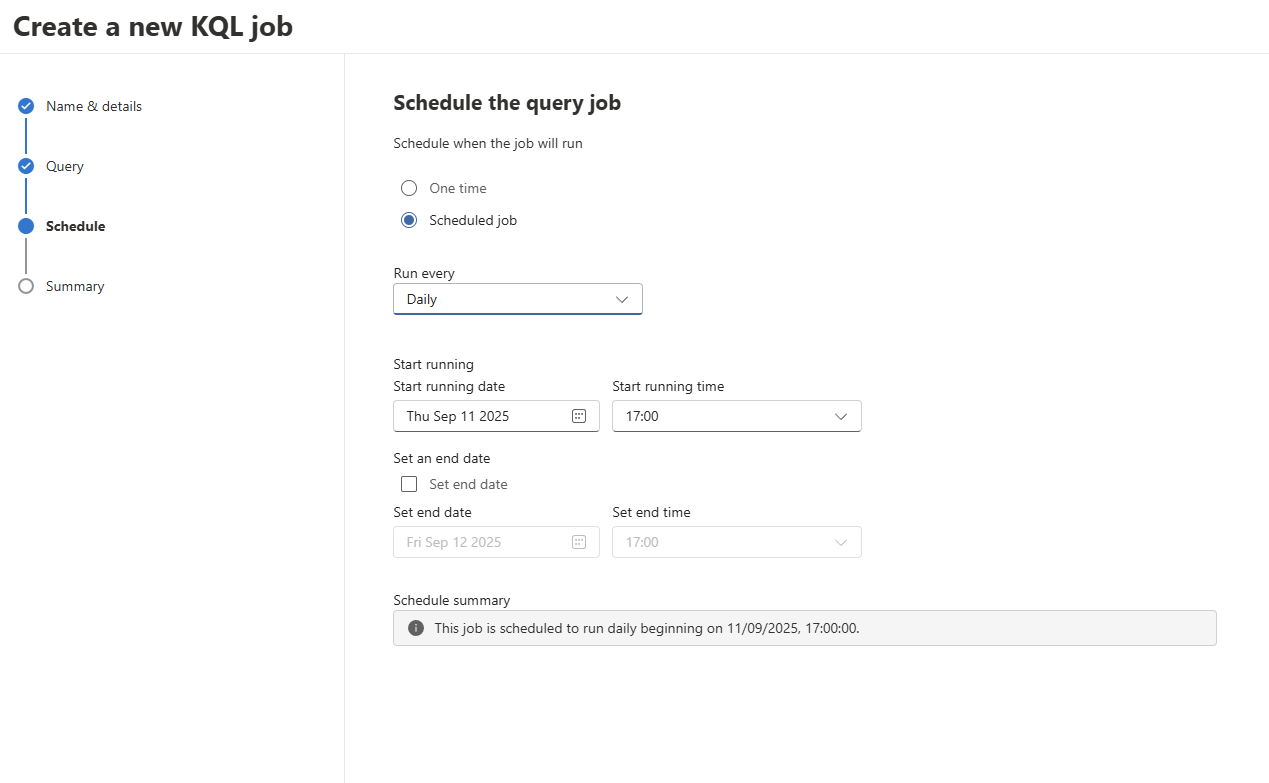

Currently, Jobs can run at most once a day, whereas Summary Rules could run every 20 minutes. Keep that in mind: for now, this makes Jobs unsuitable for near-real-time alerting (something that a SIEM should do, in my opinion).

Verdict

After trying Data Lake, I still think it will be revolutionary (still don't like the sound of that). BUT there are some caveats that need to be fixed for Data Lake:

- Jobs need to be able to run more often: at least every 5 minutes, in my opinion. Or another option needs to be provided, because I don't want to go around splitting logs before ingest just to get both fast alerts and cost savings.

- The inventory data needs to be expanded. About half of the current tables are essentially worthless.

- Some insight into accrued costs for Data Lake querying would be nice. I want to be able to see how much I've racked up by querying Data Lake, since that's not free.

So I'm really excited to see where Data Lake goes during the preview or at launch. It could be a game changer or just good, depending on the points above.